When I wrote about ChatGPT back in January, I chickened out. I stayed away from the tougher questions AI poses. Why? Because I’m a chicken, and chickens don’t cross roads — especially not AI crossroads!

Ok but really, I shied away from that discussion because I was having fun playing with ChatGPT. More fun – I promise you – than thinking existentially about AI endgames. And also, I’m not an AI expert, and none of my readers (so far!) are policymakers or tech CEOs.

But now we have to revisit it, because we are at a crossroads for AI. The US government and tech leaders are voicing their opinions on the direction of this new tech, and I wanted to write to clarify my own thinking.

Let’s dive in.

Recent News

AI “Pause” movement: In March, 1,000+ tech leaders and researchers signed an open letter calling for all AI labs “to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.” Elon Musk and Steve Wozniak (Apple co-founder) signed it. They are concerned we’re moving too fast and ignoring the risks. More on that here from TechCrunch.

Regulatory scrutiny: Wednesday, FTC Chair Lina Khan published a NY Times op-ed saying AI could turn tech incumbents into AI monopolies, fuel fraud via AI-crafted messages, perpetuate bias if trained on biased data, and incentivize even more data collection and user privacy invasion. She promised the FTC would use all its powers to prevent those bad outcomes.

Executive action: Thursday, the White House announced new actions to address AI innovation and protect constituents. They’ll invest $140M in National AI Research Institutes for the public good, perform public assessments of existing AI systems (including those from Google, Microsoft, and OpenAI), and set a framework for the US Government to invest in and use AI.

What all these groups seem to be saying is, “let’s go slow.” But I think it’s worth a debate, and I’m personally torn. Here’s my view, in a nutshell:

Geopolitically and economically, we should go fast.

Existentially and socially, we should go slow.

Understanding the Stakes

Geopolitically

Geopolitics can be boring. Let’s spice it up. Let’s pretend there’s a world with three equally powerful kingdoms, and they’re locked in a race to develop a new weapon. Whoever finishes first can take over or destroy the others. If you’re one of the kings, how could you protect your kingdom?

You could cooperate, organizing alliances. The alliance could be to speed up R&D and share success three ways, or to collude against one king, or to stop the weapons research completely.

You could ignore alliances and go all-in on R&D, clawing your way to finish first in the race. That way, you guarantee safety for your kingdom.

If this reminds you of the race to develop the A-bomb – I think that’s exactly the right comparison. I’ve written about the US-China rivalry before, and I’ll borrow from my TikTok article to make this point:

The US and China are battling for global supremacy. Having the best technology firms in the world isn’t only important for bragging rights. Weapons systems, surveillance capabilities, and other military applications make tech leadership critical. Not to mention the huge GDP growth that a booming tech sector can help fuel.

Being first in AI has military and GDP implications that make it really important to win. Since relations between the US and China are tense right now, and one of us is more likely to win than any other country, alliances aren’t a likely possibility. So in response to the pause movement, at a country level – absolutely not.

Existentially

Nick Bostrom is a philosopher who studies AI risk. His most popular book about AI, Superintelligence, has been recommended by everyone from Elon Musk to Bill Gates. I don’t agree with all his conclusions, but I have learned from his thought process. Here’s how he thinks AI might threaten humanity existentially (oversimplified here):

AI advancement is real. Eventually, a Super AI will do everything better than humans.

When the Super AI is already better than us, it will be impossible to add safeguards.

Without safeguards, the Super AI could destroy life as we know it, either:

Accidentally – pursuing goals we sloppily set for it

On purpose – pursuing its own goals

It’s helpful to have an example, so I’ll borrow one from the book. Imagine you’re the CEO of a paperclip company and you magically have the first Super AI. Congrats! If you tell the Super AI to help you maximize paperclip production, it might take the following steps all on its own:

Tweak the production schedule to eke out more paperclips. Nice!

Send emails, sign contracts, and send payments for new factories. Expansion!

Replace most humans in the factories with robots to improve quality. Ok, fair.

Hook up remaining humans to life support so they don’t need sleep or breaks. Hang on, what?

Cover the Earth in factories, crowding out agriculture and food production. Wait, stop!

Start mining in space for more metal to keep up production. Nooo!

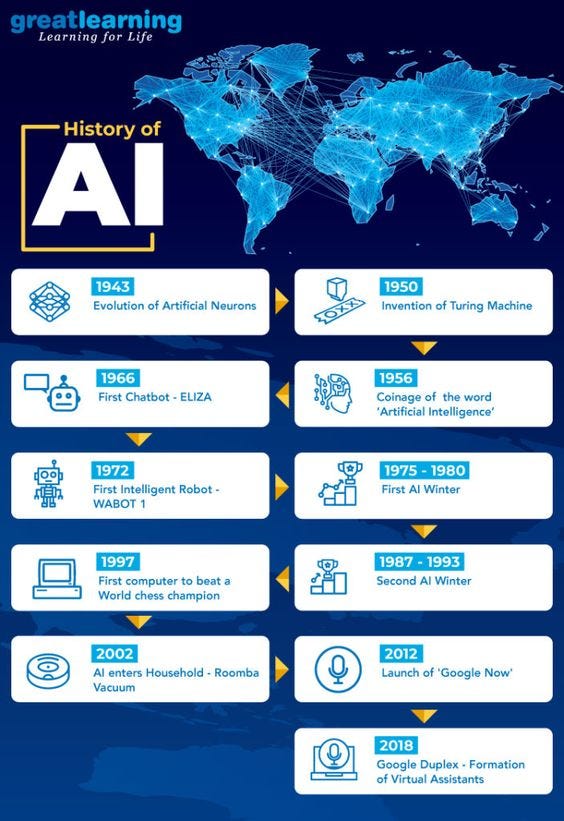

You get the picture. I don’t 100% buy this slippery slope argument, but it has elements of truth. In the few years of programming I’ve done, one major takeaway is that computers do only what you tell them to do, not what you intend them to do. So if we are rushing to be first and we accidentally give a Super AI the wrong goal, that feels risky. Compounding that risk is another takeaway from Bostrom’s book: the latest AI models learn so much faster than we do. AI could quickly skip from something much dumber than us to something much smarter than us. Just look at how AI has accelerated over the last 80 years:

Bostrom advocates that we put in reliable safeguards way before we think we need to, and I agree with him.
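
For the programmers reading, here is a toy sketch of the "computers do what you say, not what you intend" problem from the paperclip example. It is purely illustrative: the `run_factory` function, the `protect` safeguard, and the made-up resource numbers are all my own assumptions, not anything from Bostrom's book or a real AI system.

```python
def run_factory(resources, protect=()):
    """Toy 'AI' with a single goal: maximize paperclips.

    It converts every resource it is allowed to touch into paperclips.
    `protect` is a hypothetical safeguard listing resources it must leave alone.
    """
    remaining = dict(resources)  # copy so the caller's world is not modified
    paperclips = 0
    for name in list(remaining):
        if name in protect:
            continue  # the safeguard: skip anything we said is off limits
        paperclips += remaining[name]
        remaining[name] = 0
    return paperclips, remaining


# Made-up units of "stuff" in the world.
world = {"steel": 100, "farmland": 50, "cities": 30}

# Goal as stated: "maximize paperclips". Nothing says to spare the farmland.
naive_clips, naive_world = run_factory(world)

# Same goal, with the safeguard written down before the run starts.
safe_clips, safe_world = run_factory(world, protect=("farmland", "cities"))

print(naive_clips, naive_world)
print(safe_clips, safe_world)
```

The first run makes more paperclips and leaves nothing else standing. The second run makes fewer paperclips but keeps the farmland and cities, and only because someone wrote the safeguard into the instructions ahead of time.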

Socially

Let’s assume for a minute that we are safe from existential disaster… are there other social impacts to worry about? Yes, definitely. Here are a few categories and examples:

Training data bias – if an investment bank uses an AI hiring algorithm trained on historical hiring data, it might just continue hiring white dudes with slick haircuts

Polarization – if news recommendation algorithms can keep you engaged by showing you divisive political trash, we might get pushed further and further into our party’s corner

Device addiction – if AI-based scrolling video apps are distracting enough to make hours feel like minutes, we might be missing out on social connections and further shortening attention spans

Aside from these risks, AI is also socially challenging when it changes the jobs humans do. We’re already seeing this hit tech and traditional industries. IBM’s CEO announced this week that he expects to replace 30% of back-office roles with AI in the next five years. And the Writers Guild of America (WGA) is on strike this week – with AI on the agenda.

According to CNN, the labor union said AI should be regulated so it “can’t write or rewrite literary material, can’t be used as source material” and that writers’ work “can’t be used to train AI.” Working through these social challenges will take time. So this is another reason to advocate for going slow.

Economically

Things are mixed economically. On the one hand, we will probably see negative economic outcomes for individuals who lose jobs to AI. In aggregate, there could be other negative economic effects. As Lina Khan rightly points out, too much monopoly power for incumbents might lead to higher prices for consumers, SMBs driven out of business, etc.

On the other hand, there are positives in aggregate too. The revenue prizes for the firms that win (and the GDP boost for the countries that win) are incredibly tempting. For me, the economic benefits – especially in the long term – outweigh the short-term costs.

So What

Weighing these competing forces isn’t easy, but I personally think we should be going fast and avoiding a pause. A pause hinges on having a tech-savvy, well-equipped government, coordinating activities with other governments, with monitoring and enforcement capabilities in place. We don’t have that.

Tech-savvy, well-equipped government

The US does not have a strong track record of understanding and legislating tech. As I discussed in relation to Apple in a prior article – bipartisan legislation to rein in big tech repeatedly failed to pass in the US. Separately, from “FTC 101”, we know the FTC doesn’t have the budget to police big tech firms on simpler issues with smaller stakes. Regulating AI will be even harder. As noisy as the FTC and the White House have been about AI this week, I think it’s more bark than bite.

Coordinating activities with other governments

Every country is taking its own approach to AI and tech regulation. For example, the UK leaped (leapt? who cares?!) ahead and passed user privacy laws in 2018, which we still don’t have in the US. The UK also passed sweeping big tech legislation a few years ago (in comparison to the failed US effort). And Italy is among several countries that banned ChatGPT outright, while in the US everyone is trying it out. Countries are far from being on the same page.

With monitoring and enforcement capabilities

Even with a global alliance, monitoring and enforcing nuclear disarmament has been incredibly hard. And nuclear weapons need huge production facilities that take up lots of physical space. With AI, a small team of software experts can build algorithms nearly anywhere. Monitoring and enforcing an AI development pause isn’t possible.

With all that said, I hope (and expect) the government and certain tech groups will keep taking steps to mitigate the social, existential, and economic risks AI poses.

I am worried about the existential risks in particular, but I think one benefit of AI going viral and tons of people playing with ChatGPT is that it also serves to sound the alarm early. My view is that we are a few decades away from a Super AI, so this early alarm will give us time to build those necessary safeguards. And I hope that other tech leaders – for example China and Europe – will coordinate on that. But I think they are more likely to coordinate if we are in the lead, or if we are at least at parity with their capabilities.

So in the meantime… like Ricky Bobby said in Talladega Nights: “I wanna go fast.”